Check out other books by Al Sweigart, free online or available for purchase:

...and other books as well! Or register for the online video course. You can also donate to support the author directly.

In the previous chapter, we covered using memoization to optimize recursive functions. This chapter explores a technique called tail call optimization, which is a feature provided by a compiler or interpreter to avoid stack overflows. Tail call optimization is also called tail call elimination, or tail recursion elimination.

This chapter is meant to explain tail call optimization, not to endorse it. I would go so far as to recommend never using tail call optimization. As you’ll see, rearranging your function’s code to use tail call optimization often makes it far less understandable. You should consider tail call optimization to be more of a hack or workaround to make recursion work when you shouldn’t be using a recursive algorithm in the first place. Remember, a complex recursive solution is not automatically an elegant solution; simple coding problems should be solved with simple non-recursive methods.

Many implementations of popular programming languages don’t even offer tail call optimization as a feature. These include interpreters and compilers for Python, JavaScript, and Java. However, tail call optimization is a technique you should become familiar with in case you come across it in the code projects you work on.

To make use of tail call optimization, a function must use tail recursion. In tail recursion, the recursive function call is the last action of a recursive function. In code, this looks like a return statement returning the results of a recursive call.

To see this in action, recall the factorialByRecursion.py and factorialByRecursion.html programs in Chapter 2. These programs calculated the factorial of an integer; for instance, 5! is equivalent to 5 × 4 × 3 × 2 × 1, or 120. These calculations can be performed recursively because factorial(n) is equivalent to n * factorial(n - 1), with the base case of n == 1 returning 1.

Let’s rewrite these programs to use tail recursion. The following factorialTailCall.py program has a factorial() function that uses tail recursion:

Python

def factorial(number, accum=1):

if number == 1:

# BASE CASE

return accum

else:

# RECURSIVE CASE

return factorial(number - 1, accum * number)

print(factorial(5))The factorialTailCall.html program has the equivalent JavaScript code:

JavaScript

<script type="text/javascript">

function factorial(number, accum=1) {

if (number === 1) {

// BASE CASE

return accum;

} else {

// RECURSIVE CASE

return factorial(number - 1, accum * number);

}

}

document.write(factorial(5));

</script>Notice that the factorial() function’s recursive case ends with a return statement returning the results of a recursive call to factorial(). To allow the interpreter or compiler to implement tail call optimization, the last action a recursive function makes must be to return the results of the recursive call. No instructions can occur between making the recursive call and the return statement. The base case returns the accum parameter. This is the accumulator, explained in the next section.

To understand how tail call optimization works, remember from Chapter 1 what happens when a function is called. First, a frame object is created and stored on the call stack. If the function call calls another function, another frame object is created and placed on top of the first frame object on the call stack. When a function returns, your program automatically deletes the frame object from the top of the stack.

A stack overflow happens when too many function calls are made without returning, causing the number of frame objects to exceed the capacity of the call stack. This capacity is 1,000 function calls for Python and about 10,000 for JavaScript programs. While these amounts are more than enough for typical programs, recursive algorithms could exceed this limit and cause a stack overflow that crashes your program.

Recall from Chapter 2 that a frame object stores the local variables in the function call as well as the return address of the instruction to return to when the function finishes. However, if the last action in the recursive case of a function is to return the results of a recursive function call, there’s no need to retain the local variables. The function does nothing involving the local variables after the recursive call, so the current frame object can be deleted immediately. The next frame object’s return address information can be the same as the old deleted frame object’s return address.

Since the current frame object is deleted instead of retained on the call stack, the call stack never grows in size and can never cause a stack overflow!

Recall from Chapter 1 that all recursive algorithms can be implemented with a stack and a loop. Since tail call optimization removes the need for a call stack, we are effectively using recursion to simulate a loop’s iterative code. However, earlier in this book I stated that the problems suitable for recursive solutions involve a tree-like data structure and backtracking. Without a call stack, no tail recursive function could possibly do any backtracking work. In my view, every algorithm that can be implemented with tail recursion would be easier and more readable to implement with a loop instead. There’s nothing automatically more elegant about using recursion for recursion’s sake.

The disadvantage of tail recursion is that it requires rearranging your recursive function so that the last action is returning the recursive call’s return value. This can make our recursive code even more unreadable. Indeed, the factorial() function in this chapter’s factorialTailCall.py and factorialTailCall.html programs is a bit harder to comprehend than the version in Chapter 2’s factorialByRecursion.py and factorialByRecursion.html programs.

In the case of our tail call factorial() function, a new parameter named accum follows the calculated product as recursive function calls are made. This is known as an accumulator parameter, and it keeps track of a partial result of a calculation that would otherwise have been stored in a local variable. Not all tail recursive functions use accumulators, but they act as a workaround for tail recursion’s inability to use local variables after the final recursive call. Notice that in factorialByRecursion.py’s factorial() function, the recursive case was return number * factorial(number - 1). The multiplication happens after the factorial(number - 1) recursive call. The accum accumulator takes the place of the number local variable.

Also notice that the base case for factorial() no longer returns 1; rather, it returns the accum accumulator. By the time factorial() is called with number == 1 and the base case is reached, accum stores the final result to return. Adjusting your code to use tail call optimization often involves changing the base case to return the accumulator’s value.

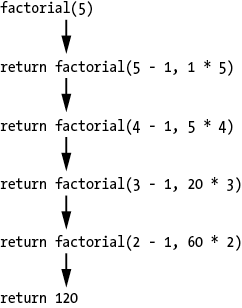

You can think of the factorial(5) function call as transforming into the following return, as shown in Figure 8-1.

Figure 8-1: The process of transformations that factorial(5) makes to the integer 120

Rearranging your recursive calls as the last action in the function and adding accumulators can make your code even harder to understand than typical recursive code. But that’s not the only downside of tail recursion, as we’ll see in the next section.

Tail recursive functions require rearranging their code to make them suitable for the tail call optimization feature of the compiler or interpreter. However, not all compilers and interpreters offer tail call optimization as a feature. Notably, CPython (the Python interpreter downloaded from https://python.org) does not implement tail call optimization. Even if you write your recursive functions with the recursive call as the last action, it will still cause stack overflows after enough function calls are made.

Not only that, but CPython will likely never have tail call optimization as a feature. Guido van Rossum, the creator of the Python programming language, has explained that tail call optimization can make programs harder to debug. Tail call optimization removes frame objects from the call stack, which in turn removes the debugging information that frame objects can provide. He also points out that once tail call optimization is implemented, Python programmers will begin to write code that depends on the feature, and their code won’t run on non-CPython interpreters that don’t implement tail call optimization.

Finally, and I concur, van Rossum disagrees with the idea that recursion is a fundamentally important part of day-to-day programming. Computer scientists and mathematicians tend to place recursion on a pedestal. But tail call optimization is simply a workaround hack to make some recursive algorithms actually workable, rather than simply crashing with a stack overflow.

While CPython doesn’t feature tail call optimization, this doesn’t mean another compiler or interpreter that implements the Python language couldn’t have tail call optimization. Unless tail call optimization is explicitly a part of a programming language specification, it is not a feature of a programming language, but rather of individual compilers or interpreters of a programming language.

The lack of tail call optimization is not unique to Python. The Java compiler also doesn’t support tail call optimization. Tail call optimization is a part of the ECMAScript 6 version of JavaScript; however, as of 2022, only the Safari web browser’s implementation of JavaScript actually supports it. One way to determine whether your programming language’s compiler or interpreter implements this feature is to write a tail recursive factorial function and try to calculate the factorial of 100,000. If the program crashes, tail call optimization is not implemented.

Personally, I take the stance that the tail recursion technique should never be used. As stated in Chapter 2, any recursive algorithm can be implemented with a loop and a stack. Tail call optimization prevents stack overflows by effectively removing the use of the call stack. Therefore, all tail recursive algorithms can be implemented with a loop alone. Since the code for loops is much simpler than a recursive function, a loop should be used wherever tail call optimization could be employed.

Additionally, potential problems exist even if tail call optimization is implemented. Since tail recursion is possible only when the last action of a function is returning the recursive call’s return value, it’s impossible to do tail recursion for algorithms that require two or more recursive calls. For example, take the Fibonacci sequence algorithm calls fibonacci(n - 1) and fibonacci(n - 2). While tail call optimization can be performed for the latter recursive call, the first recursive call will cause a stack overflow for large-enough arguments.

Let’s examine some of the recursive functions demonstrated earlier in this book to see if they are good candidates for tail recursion. Keep in mind that because Python and JavaScript do not actually implement tail call optimization, these tail recursive functions will still result in a stack overflow error. These case studies are for demonstration purposes only.

The first example is the program to reverse a string that we made in Chapter 3. The Python code for this tail recursive function is in reverseStringTailCall.py:

Python

❶ def rev(theString, accum=''):

if len(theString) == 0:

# BASE CASE

❷ return accum

else:

# RECURSIVE CASE

head = theString[0]

tail = theString[1:]

❸ return rev(tail, head + accum)

text = 'abcdef'

print('The reverse of ' + text + ' is ' + rev(text))The JavaScript equivalent is in reverseStringTailCall.html:

JavaScript

<script type="text/javascript">

❶ function rev(theString, accum='') {

if (theString.length === 0) {

// BASE CASE

❷ return accum;

} else {

// RECURSIVE CASE

let head = theString[0];

let tail = theString.substring(1, theString.length);

❸ return rev(tail, head + accum);

}

}

let text = "abcdef";

document.write("The reverse of " + text + " is " + rev(text) + "<br />");

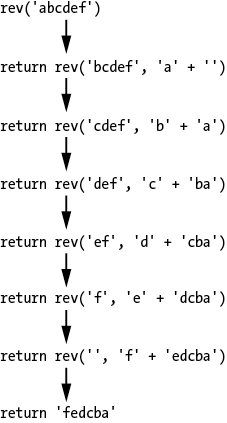

</script>The conversion of the original recursive functions in reverseString.py and reverseString.html involves adding an accumulator parameter. The accumulator, named accum, is set to the blank string by default if no argument is passed for it ❶. We also change the base case from return '' to return accum ❷, and the recursive case from return rev(tail) + head (which performs a string concatenation after the recursive call returns) to return rev(tail, head + accum) ❸. You can think of the rev('abcdef') function call as transforming into the following return, as shown in Figure 8-2.

By effectively using the accumulator as a local variable shared across function calls, we can make the rev() function tail recursive.

Figure 8-2: The process of transformations that rev('abcdef') makes to the string fedcba

Some recursive functions naturally end up using the tail recursion pattern. If you look at the findSubstringRecursive() function in the findSubstring.py and findSubstring.html programs in Chapter 2, you’ll notice that the last action for the recursive case is to return the value of the recursive function call. No adjustments are needed to make this function tail recursive.

The exponentByRecursion.py and exponentByRecursion.html programs, also from Chapter 2, are not good candidates for tail recursion. These programs have two recursive cases for when the n parameter is even or odd. This is fine: as long as all the recursive cases return the return value of the recursive function call as their last action, the function can use tail call optimization.

However, notice the Python code for the n is even recursive case:

Python

--snip--

elif n % 2 == 0:

# RECURSIVE CASE (when n is even)

result = exponentByRecursion(a, n / 2)

return result * result

--snip--And notice the equivalent JavaScript recursive case:

JavaScript

--snip--

} else if (n % 2 === 0) {

// RECURSIVE CASE (when n is even)

result = exponentByRecursion(a, n / 2);

return result * result;

--snip--This recursive case does not have the recursive call as its last action. We could get rid of the result local variable, and instead call the recursive function twice. This would reduce the recursive case to the following:

--snip--

return exponentByRecursion(a, n / 2) * exponentByRecursion(a, n / 2)

--snip--However, now we have two recursive calls to exponentByRecursion(). Not only does this needlessly double the amount of computation the algorithm performs, but the last action performed by the function is to multiply the two return values. This is the same problem the recursive Fibonacci algorithm has: if the recursive function has multiple recursive calls, at least one of those recursive calls can’t be the last action of the function.

To determine whether an integer is odd or even, you can use the % modulus operator. The expression number % 2 == 0 will be True if number is even, and False if number is odd. However, if you’d prefer to overengineer a more “elegant” recursive algorithm, you can implement the following isOdd() function in isOdd.py (the rest of isOdd.py is presented later in this section):

Python

def isOdd(number):

if number == 0:

# BASE CASE

return False

else:

# RECURSIVE CASE

return not isOdd(number - 1)

print(isOdd(42))

print(isOdd(99))

--snip--The JavaScript equivalent is in isOdd.html:

JavaScript

<script type="text/javascript">

function isOdd(number) {

if (number === 0) {

// BASE CASE

return false;

} else {

// RECURSIVE CASE

return !isOdd(number - 1);

}

}

document.write(isOdd(42) + "<br />");

document.write(isOdd(99) + "<br />");

--snip--We have two base cases for isOdd(). When the number argument is 0, the function returns False to indicate even. For simplicity, our implementation of isOdd() works for only positive integers. The recursive case returns the opposite of isOdd(number - 1).

You can see why this works with an example: when isOdd(42) is called, the function can’t determine if 42 is even or odd but does know that the answer is the opposite of whether 41 is odd or even. The function will return not isOdd(41). This function call, in turn, returns the opposite Boolean value of isOdd(40), and so on, until isOdd(0) returns False. The number of recursive function calls determines the number of not operators that act on return values before the final return value is returned.

However, this recursive function results in stack overflows for large-number arguments. Calling isOdd(100000) results in 100,001 function calls without returning—which far exceeds the capacity of any call stack. We can rearrange the code in the function so that the last action of the recursive case is returning the results of the recursive function call, making the function tail recursive. We do this in isOddTailCall() in isOdd.py. Here is the rest of the isOdd.py program:

Python

--snip--

def isOddTailCall(number, inversionAccum=False):

if number == 0:

# BASE CASE

return inversionAccum

else:

# RECURSIVE CASE

return isOddTailCall(number - 1, not inversionAccum)

print(isOddTailCall(42))

print(isOddTailCall(99))The JavaScript equivalent is in the rest of isOdd.html:

JavaScript

--snip--

function isOddTailCall(number, inversionAccum) {

if (inversionAccum === undefined) {

inversionAccum = false;

}

if (number === 0) {

// BASE CASE

return inversionAccum;

} else {

// RECURSIVE CASE

return isOddTailCall(number - 1, !inversionAccum);

}

}

document.write(isOddTailCall(42) + "<br />");

document.write(isOddTailCall(99) + "<br />");

</script>If this Python and JavaScript code is run by an interpreter that supports tail call optimization, calling isOddTailCall(100000) won’t result in a stack overflow. However, tail call optimization is still much slower than simply using the % modulus operator to determine oddness or evenness.

If you think recursion, with or without tail recursion, is an incredibly inefficient way to determine whether a positive integer is odd, you are absolutely correct. Unlike iterative solutions, recursion can fail from stack overflows. Adding tail call optimization to prevent stack overflows doesn’t fix the efficiency flaws of using recursion inappropriately. As a technique, recursion is not automatically better or more sophisticated than iterative solutions. And tail recursion is never a better approach than a loop or other simple solution.

Tail call optimization is a feature of a programming language’s compiler or interpreter that can be employed on recursive functions specifically written to be tail recursive. Tail recursive functions return the return value of the recursive function call as the last action in the recursive case. This allows the function to delete the current frame object and prevent the call stack from growing as new recursive function calls are made. If the call stack doesn’t grow, the recursive function can’t possibly cause a stack overflow.

Tail recursion is a workaround that allows some recursive algorithms to work with large arguments without crashing. However, this approach requires rearranging your code and possibly adding an accumulator parameter. This could make your code harder to understand. You may likely find that sacrificing code readability is not worth using a recursive algorithm over an iterative one.

Stack Overflow (the website, not the programming error) has a detailed discussion about the basics of tail recursion at https://stackoverflow.com/questions/33923/what-is-tail-recursion.

Van Rossum wrote about his decision not to use tail recursion in two blog posts at https://neopythonic.blogspot.com.au/2009/04/tail-recursion-elimination.html and https://neopythonic.blogspot.com.au/2009/04/final-words-on-tail-calls.html.

Python’s standard library includes a module called inspect that allows you to view the frame objects on the call stack as a Python program is running. The official documentation for the inspect module is at https://docs.python.org/3/library/inspect.html, and a tutorial on Doug Hellmann’s Python 3 Module of the Week blog is at https://pymotw.com/3/inspect.

Test your comprehension by answering the following questions: